Surface Elevation

Applying Noise to a Mesh

- Modify vertex positions of a generated mesh.

- Use noise to determine elevation.

- Recalculate normal and tangent vectors.

This is the first tutorial in a series about pseudorandom surfaces. It takes what we made in the Pseudorandom Noise and Procedural Meshes series and uses it to create a mesh with varying elevation.

This tutorial is made with Unity 2020.3.35f1.

Project Setup

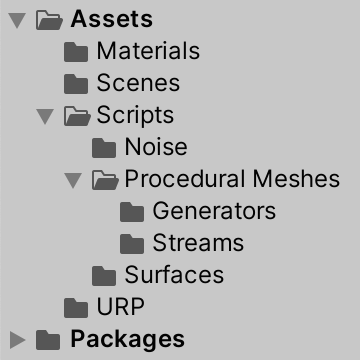

This series directly follows the series about pseudorandom noise and procedural meshes. We'll use what we created in those series to generate pseudorandom surfaces. So we'll copy what we need from the final tutorials of those series.

Procedural Meshes

We use a copy of the Icosphere tutorial as our starting point. Either use your own project or download the tutorial's repository. Keep everything in that project, except for the scene and code assets used for the quad example, which can be removed.

Rename ProceduralMesh to ProceduralSurface and put it in a Surfaces folder to keep it separate. Rename the scene and adjust the game object in it to match this change.

public class ProceduralSurface : MonoBehaviour { … }

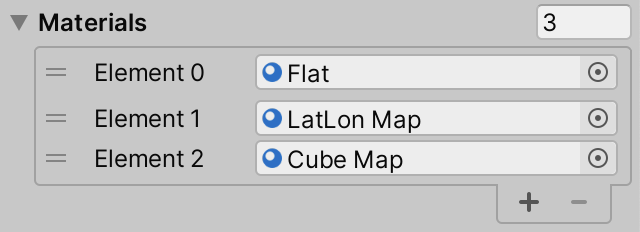

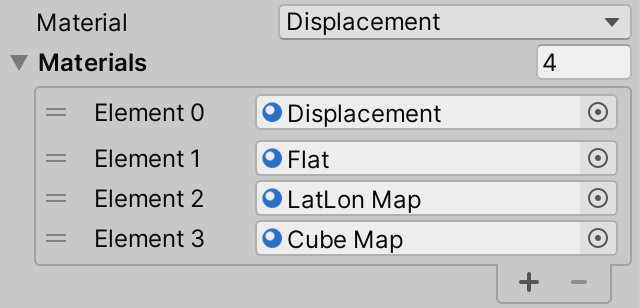

Remove the Procedural Mesh shader graph and the ProceduralMesh HLSL asset that it relied on. Remove the Ripple material and change the Flat and LatLon Map materials to use the Universal Render Pipeline / Lit shader instead. Also remove Ripple from the ProceduralSurface.MaterialMode enum and the materials configuration.

//public enum MaterialMode { Flat, Ripple, LatLonMap, CubeMap }

public enum MaterialMode { Flat, LatLonMap, CubeMap }

Noise

We're also going to use noise, so copy the code from the Simplex Noise tutorial and put it in a Noise folder. Include all Noise code assets, MathExtensions, and SpaceTRS. Exclude the assets dealing with noise visualization and shapes from it.

Modifying Vertices

The goal of this tutorial is to create a mesh surface that gets displaced by pseudorandom noise, baked into the mesh. We'll do this by first generating a base mesh via an existing mesh job and then displacing its vertices with noise, for which we'll create a new surface job.

Surface Job

Create a new SurfaceJob in the Surfaces folder. Make it a standard IJobFor implementation that modifies an array of positions, initially without any vectorization. We begin with a simple job that would turn a flat surface into a wedge, by making the Y coordinates equal to the absolute value of the X coordinates.

using Unity.Burst;

using Unity.Collections;

using Unity.Jobs;

using Unity.Mathematics;

using UnityEngine;

using static Unity.Mathematics.math;

[BurstCompile(FloatPrecision.Standard, FloatMode.Fast, CompileSynchronously = true)]

public struct SurfaceJob : IJobFor {

NativeArray<float3> positions;

public void Execute (int i) {

float3 p = positions[i];

p.y = abs(p.x);

positions[i] = p;

}

}

Also give it a static ScheduleParallel method, which naively invokes GetVertexData<float3> on some mesh data to supply the positions to the job.

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, JobHandle dependency

) => new SurfaceJob() {

positions = meshData.GetVertexData<float3>()

}.ScheduleParallel(meshData.vertexCount, resolution, dependency);

Currently ProceduralSurface.GenerateMesh only generates the configured mesh shape. To also modify its vertices we can run SurfaceJob directly after generating the base mesh.

jobs[(int)meshType](mesh, meshData, resolution, default).Complete();

SurfaceJob.ScheduleParallel(meshData, resolution, default).Complete();

We don't need to complete both jobs explicitly. We can chain them by passing the handle of the mesh job to the surface job as a dependency. This way we only have a single point where we have to wait for completion.

//jobs[(int)meshType](mesh, meshData, resolution, default).Complete();

SurfaceJob.ScheduleParallel(

meshData, resolution,

jobs[(int)meshType](mesh, meshData, resolution, default)

).Complete();

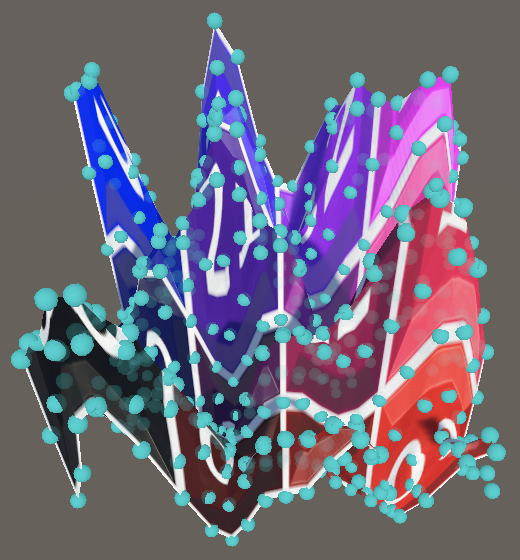

Let's try this for the shared square grid.

This naive approach doesn't work. The shared square grid uses SingleStream to manage its vertex data, which contains more than just positions in a single vertex stream. The job runs without throwing an error because the SingleStream.Stream0 struct contains twelve floats, so it always aligns with a multiple of float3. But those float3 values cut the stream into portions that cover all vertex data, not just the positions. Because the job only runs once per vertex, it ends up modifying only a portion of the data, changing positions, normals, tangents, and UV coordinates.
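To make the misalignment concrete, here is a minimal sketch in plain C# without Unity. The Float2, Float3, Float4, and Stream0Layout types and the StreamLayoutDemo class are hypothetical stand-ins that only mirror the field order of SingleStream.Stream0; they are not part of the project.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical stand-ins for the Unity.Mathematics vector types.
[StructLayout(LayoutKind.Sequential)]
public struct Float2 { public float x, y; }

[StructLayout(LayoutKind.Sequential)]
public struct Float3 { public float x, y, z; }

[StructLayout(LayoutKind.Sequential)]
public struct Float4 { public float x, y, z, w; }

// Mirrors the field order of SingleStream.Stream0: twelve floats per vertex.
[StructLayout(LayoutKind.Sequential)]
public struct Stream0Layout {
    public Float3 position, normal;
    public Float4 tangent;
    public Float2 texCoord0;
}

public static class StreamLayoutDemo {
    // Size of one full vertex in bytes.
    public static int VertexSizeInBytes => Marshal.SizeOf<Stream0Layout>();

    // Size of one float3-like slice in bytes.
    public static int Float3SizeInBytes => Marshal.SizeOf<Float3>();

    // How many float3-sized slices a single vertex is cut into.
    public static int Float3SlicesPerVertex =>
        VertexSizeInBytes / Float3SizeInBytes;

    // Name of the field in which a given float3 slice of a vertex starts.
    public static string FieldAtSlice (int slice) {
        int firstFloat = slice * 3;
        if (firstFloat < 3) return "position";
        if (firstFloat < 6) return "normal";
        if (firstFloat < 10) return "tangent";
        return "texCoord0";
    }
}
```

Each vertex is cut into four float3 slices, of which only the first is a position. The last slice starts at the tangent's fourth component and runs into the texture coordinates. A job that runs once per vertex therefore touches only the first quarter of the vertices, but all of their data.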

The quickest way to fix this issue is to change the ProceduralSurface.jobs array so that the shared square grid uses MultiStream instead. That way the first vertex stream only contains float3 position data and SurfaceJob correctly interprets that data.

MeshJob<SharedSquareGrid, MultiStream>.ScheduleParallel,

Vertex Data

Although switching to MultiStream works, we shouldn't make our mesh less efficient to render just to make our job run without issues. We should instead adjust our approach to work with efficient mesh data. So let's switch back to using SingleStream.

MeshJob<SharedSquareGrid, SingleStream>.ScheduleParallel,

What we'll do instead is adjust our job to retrieve the correct vertex data. Begin by making the SingleStream.Stream0 type publicly accessible.

public struct SingleStream : IMeshStreams {

[StructLayout(LayoutKind.Sequential)]

public struct Stream0 {

public float3 position, normal;

public float4 tangent;

public float2 texCoord0;

}

…

}

Now we can adjust SurfaceJob so it retrieves the vertex data in the correct format. It now has to retrieve the position from the vertex data in Execute instead of directly working with an array of positions.

using ProceduralMeshes.Streams;

using static Unity.Mathematics.math;

[BurstCompile(FloatPrecision.Standard, FloatMode.Fast, CompileSynchronously = true)]

public struct SurfaceJob : IJobFor {

NativeArray<SingleStream.Stream0> vertices;

public void Execute (int i) {

SingleStream.Stream0 v = vertices[i];

float3 p = v.position;

p.y = abs(p.x);

v.position = p;

vertices[i] = v;

}

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, JobHandle dependency

) => new SurfaceJob() {

vertices = meshData.GetVertexData<SingleStream.Stream0>()

}.ScheduleParallel(meshData.vertexCount, resolution, dependency);

}

We could come up with a way to support multiple stream formats, but to keep things simple for now we'll exclusively work with SingleStream. To make sure that all mesh shapes are supported, change all entries in the jobs array to use SingleStream, even though some of the spheres only use positions.

Vectorization

Our noise code is designed for vectorization, so SurfaceJob should be vectorized as well. This means that it needs to work on four vertices at once. Give it a Vertex4 struct to package four vertices and use that for the vertices array, dividing the job count by four. Then adjust all four positions in Execute instead of one.

struct Vertex4 {

public SingleStream.Stream0 v0, v1, v2, v3;

}

NativeArray<Vertex4> vertices;

public void Execute (int i) {

Vertex4 v = vertices[i];

v.v0.position.y = abs(v.v0.position.x);

v.v1.position.y = abs(v.v1.position.x);

v.v2.position.y = abs(v.v2.position.x);

v.v3.position.y = abs(v.v3.position.x);

vertices[i] = v;

}

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, JobHandle dependency

) => new SurfaceJob() {

vertices =

meshData.GetVertexData<SingleStream.Stream0>().Reinterpret<Vertex4>(12 * 4)

}.ScheduleParallel(meshData.vertexCount / 4, resolution, dependency);

This only works if the vertex count is divisible by four, so a resolution 6 shared square grid doesn't work, but a resolution 5 grid does.

Of course the point of vectorization is to vectorize the actual work done. We can use the same approach that we used in Noise.Job: putting all positions in a 3×4 matrix and transposing it to a 4×3 matrix. Then we can vectorize the absolute operation, after which we have to extract the Y coordinates to update the separate positions. In this case that generates a lot of overhead, but it will be worth it once we start calculating complex noise.

float4x3 p = transpose(float3x4(

v.v0.position, v.v1.position, v.v2.position, v.v3.position

));

p.c1 = abs(p.c0);

v.v0.position.y = p.c1.x;

v.v1.position.y = p.c1.y;

v.v2.position.y = p.c1.z;

v.v3.position.y = p.c1.w;
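The effect of the transposition can be illustrated with plain arrays instead of Unity.Mathematics types. This is only a sketch: TransposeDemo, ToColumns, and WedgeHeights are hypothetical names, and the loops stand in for what would be single vector operations in the real job.

```csharp
using System;

public static class TransposeDemo {
    // Input: four positions, each an { x, y, z } triple.
    // Output: three columns of four values: all X, all Y, and all Z.
    public static float[][] ToColumns (float[][] positions) {
        var columns = new float[3][];
        for (int c = 0; c < 3; c++) {
            columns[c] = new float[4];
            for (int v = 0; v < 4; v++) {
                columns[c][v] = positions[v][c];
            }
        }
        return columns;
    }

    // Wedge example: the new Y of each vertex is the absolute value of its X.
    // Once the X values sit together in one column they can be processed
    // as a group, which is what the vectorized job relies on.
    public static float[] WedgeHeights (float[][] positions) {
        float[] xColumn = ToColumns(positions)[0];
        var heights = new float[4];
        for (int v = 0; v < 4; v++) {
            heights[v] = Math.Abs(xColumn[v]);
        }
        return heights;
    }
}
```

For four positions with X coordinates −1, 0.5, 2, and −0.25, WedgeHeights returns 1, 0.5, 2, and 0.25, one height per vertex, matching what the job writes back to the separate Y coordinates.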

Modifying Vertex Count

As mentioned before, vectorization is only possible when the vertex count is a multiple of four, but the meshes generated by MeshJob don't guarantee this.

We can add support for vectorization to MeshJob by giving its ScheduleParallel method an optional boolean parameter, false by default, that indicates whether support for vectorization is needed. We'll adjust the vertex count based on this parameter. Initially just store the vertex count in a variable before configuring the streams.

public static JobHandle ScheduleParallel (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency,

bool supportVectorization = false

) {

var job = new MeshJob<G, S>();

job.generator.Resolution = resolution;

int vertexCount = job.generator.VertexCount;

job.streams.Setup(

meshData,

mesh.bounds = job.generator.Bounds,

vertexCount,

job.generator.IndexCount

);

return job.ScheduleParallel(

job.generator.JobLength, 1, dependency

);

}

However, a method with an optional parameter cannot be assigned to a delegate type that lacks that parameter, because the parameter lists have to match exactly. So to keep the existing code working the parameter cannot be optional. Instead we add a version without the parameter that forwards to the other one.

public static JobHandle ScheduleParallel (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency

) => ScheduleParallel(mesh, meshData, resolution, dependency, false);

public static JobHandle ScheduleParallel (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency,

bool supportVectorization //= false

) { … }
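The forwarding pattern can be tried in isolation with a much smaller signature. The sketch below is plain C# with hypothetical names (DelegateDemo, ScheduleDelegate, Schedule, Pick); the methods simply report whether vectorization support was requested, so we can see which overload ended up being used.

```csharp
public static class DelegateDemo {
    // A delegate type with a single parameter, like the existing
    // MeshJobScheduleDelegate but with a shortened parameter list.
    public delegate bool ScheduleDelegate (int resolution);

    // The short overload forwards to the long one with an explicit false.
    public static bool Schedule (int resolution) =>
        Schedule(resolution, false);

    // The long overload has no default value for its extra parameter.
    public static bool Schedule (int resolution, bool supportVectorization) =>
        supportVectorization;

    // The method group conversion picks the overload whose parameter list
    // matches the delegate. If only a single method with an optional
    // parameter existed, this assignment would not compile.
    public static ScheduleDelegate Pick () => Schedule;
}
```

Invoking DelegateDemo.Pick()(5) goes through the short overload and thus reports false, while calling Schedule(5, true) directly reports true.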

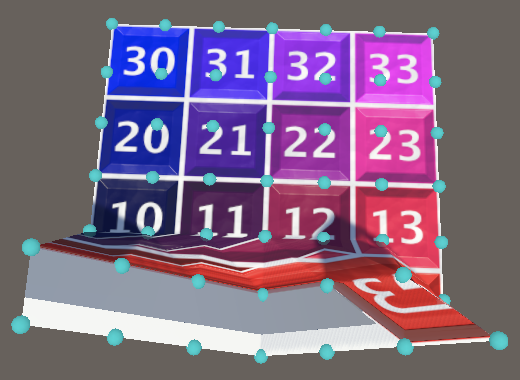

If vectorization must be supported and the vertex count is not a multiple of four, then we need to add more vertices. This is the case when the two least-significant bits of the vertex count are not zero, because those bits are the remainder of an integer division by four. If so, add four vertices minus that remainder. Thus we end up with at most three extra unused vertices, which is an insignificant amount of extra data.

int vertexCount = job.generator.VertexCount;

if (supportVectorization && (vertexCount & 0b11) != 0) {

vertexCount += 4 - (vertexCount & 0b11);

}
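As a quick check of the arithmetic, here is the same padding logic as a standalone function. VertexPadding and its method names are hypothetical. The example counts 36 and 49 assume that a shared square grid has (resolution + 1)² vertices, which gives those counts for resolutions 5 and 6.

```csharp
public static class VertexPadding {
    // Rounds a vertex count up to the next multiple of four,
    // using the same bit mask as the scheduling code.
    public static int PadToMultipleOfFour (int vertexCount) {
        int remainder = vertexCount & 0b11;
        return remainder == 0 ? vertexCount : vertexCount + 4 - remainder;
    }

    // Equivalent branch-free formulation, shown only for comparison:
    // add three, then clear the two least-significant bits.
    public static int PadToMultipleOfFourBranchFree (int vertexCount) =>
        (vertexCount + 3) & ~0b11;
}
```

A count of 36 is left unchanged, while 49 has a remainder of 1 and so becomes 52, adding three unused vertices.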

Now add a second delegate type that includes the extra parameter. Let's name it AdvancedMeshJobScheduleDelegate because it contains more options.

public delegate JobHandle MeshJobScheduleDelegate (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency

);

public delegate JobHandle AdvancedMeshJobScheduleDelegate (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency,

bool supportVectorization

);

Change the ProceduralSurface.jobs array so it contains the advanced delegates.

static AdvancedMeshJobScheduleDelegate[] jobs = { … };

And indicate that we need support for vectorization when scheduling the job in GenerateMesh.

SurfaceJob.ScheduleParallel(

meshData, resolution,

jobs[(int)meshType](

mesh, meshData, resolution, default, true

)

).Complete();

Now our code should work with all mesh types and resolutions. Note that if we generate a sphere it will be flattened, becoming a two-sided disk.

Modifying Bounds

Just as we modify the vertex count, we should also be able to modify the mesh bounds, because we will be displacing vertices. We'll do this by also adding a parameter for extra bounds extents to MeshJob.ScheduleParallel. It is a Vector3 parameter that we add to the extents of the bounds. This allows us to increase the mesh bounds depending on how we modify the mesh.

public struct MeshJob<G, S> : IJobFor

where G : struct, IMeshGenerator

where S : struct, IMeshStreams {

…

public static JobHandle ScheduleParallel (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency

) =>

ScheduleParallel(mesh, meshData, resolution, dependency, Vector3.zero, false);

public static JobHandle ScheduleParallel (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency,

Vector3 extraBoundsExtents, bool supportVectorization

) {

…

Bounds bounds = job.generator.Bounds;

bounds.extents += extraBoundsExtents;

job.streams.Setup(

meshData,

mesh.bounds = bounds,

vertexCount,

job.generator.IndexCount

);

…

}

}

…

public delegate JobHandle AdvancedMeshJobScheduleDelegate (

Mesh mesh, Mesh.MeshData meshData, int resolution, JobHandle dependency,

Vector3 extraBoundsExtents, bool supportVectorization

);

We assume that we're modifying a flat surface by displacing vertices either up or down by at most one unit. Thus we'll pass the up vector as the extra extents in ProceduralSurface.GenerateMesh. Because extents are half the size of the bounds, this would change the size of the shared square grid's bounds from (1,0,1) to (1,2,1).

jobs[(int)meshType]( mesh, meshData, resolution, default, Vector3.up, true )
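The relation between extents and size is easy to verify without Unity. The sketch below uses plain arrays in place of Bounds and Vector3; BoundsDemo and GrowSize are hypothetical names, and it assumes that the (1,0,1) of the shared square grid is the size of its bounds.

```csharp
public static class BoundsDemo {
    // Takes a bounds size per axis and extra extents per axis,
    // and returns the resulting size per axis.
    public static float[] GrowSize (float[] size, float[] extraExtents) {
        var result = new float[3];
        for (int i = 0; i < 3; i++) {
            // Extents are half the size, measured from the center.
            float extents = size[i] * 0.5f + extraExtents[i];
            result[i] = extents * 2f;
        }
        return result;
    }
}
```

Growing a size of (1,0,1) by extra extents of (0,1,0) yields (1,2,1): one unit of room above the surface and one unit below it.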

Applying Noise

Now that everything functions correctly the next step is to use noise to vertically displace the vertices. Add a generic INoise struct type parameter to SurfaceJob and give it a field for noise settings, initially simply using its default configuration.

using static Unity.Mathematics.math;

using static Noise;

[BurstCompile(FloatPrecision.Standard, FloatMode.Fast, CompileSynchronously = true)]

public struct SurfaceJob<N> : IJobFor where N : struct, INoise {

…

Settings settings;

…

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, JobHandle dependency

) => new SurfaceJob<N>() {

vertices =

meshData.GetVertexData<SingleStream.Stream0>().Reinterpret<Vertex4>(12 * 4),

settings = Settings.Default

}.ScheduleParallel(meshData.vertexCount / 4, resolution, dependency);

}

Then copy the code that generates fractal noise from Noise.Job.Execute, base it on the vectorized vertex positions, and use the result for the final Y coordinates.

//p.c1 = abs(p.c0);

var hash = SmallXXHash4.Seed(settings.seed);

int frequency = settings.frequency;

float amplitude = 1f, amplitudeSum = 0f;

float4 sum = 0f;

for (int o = 0; o < settings.octaves; o++) {

sum += amplitude * default(N).GetNoise4(p, hash + o, frequency);

amplitudeSum += amplitude;

frequency *= settings.lacunarity;

amplitude *= settings.persistence;

}

float4 noise = sum / amplitudeSum;

v.v0.position.y = noise.x;

v.v1.position.y = noise.y;

v.v2.position.y = noise.z;

v.v3.position.y = noise.w;
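The octave loop can be examined on its own with a scalar sketch. Everything here is hypothetical scaffolding: FractalSumDemo, its Fractal method, and StandInNoise, which is a sine wave used purely as a placeholder and is not Perlin noise. The sketch assumes at least one octave, otherwise the final division has a zero divisor.

```csharp
using System;

public static class FractalSumDemo {
    // Placeholder for a real noise function, returning values in [-1, 1].
    public static float StandInNoise (float x, int seedOffset, int frequency) =>
        (float)Math.Sin(x * frequency + seedOffset);

    // Sums octaves of noise and normalizes by the total amplitude,
    // following the same steps as the loop in the job.
    public static float Fractal (
        float x, int frequency, int octaves, int lacunarity, float persistence,
        Func<float, int, int, float> noise
    ) {
        float amplitude = 1f, amplitudeSum = 0f, sum = 0f;
        for (int o = 0; o < octaves; o++) {
            sum += amplitude * noise(x, o, frequency);
            amplitudeSum += amplitude;
            frequency *= lacunarity;    // each octave is more detailed
            amplitude *= persistence;   // and contributes less
        }
        // Dividing by the amplitude sum keeps the result in the same
        // range as a single octave of noise.
        return sum / amplitudeSum;
    }
}
```

Because of the normalization the result stays within the −1–1 range when the noise itself does, which is why we can later treat the displacement as a maximum.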

Then invoke an explicit noise version of SurfaceJob in ProceduralSurface.GenerateMesh. In this tutorial we'll limit ourselves to normal 2D Perlin noise.

using static Noise;

[RequireComponent(typeof(MeshFilter), typeof(MeshRenderer))]

public class ProceduralSurface : MonoBehaviour {

…

void GenerateMesh () {

…

SurfaceJob<Lattice2D<LatticeNormal, Perlin>>.ScheduleParallel(

meshData, resolution,

jobs[(int)meshType](

mesh, meshData, resolution, default, Vector3.up, true

)

).Complete();

…

}

}

Noise Configuration

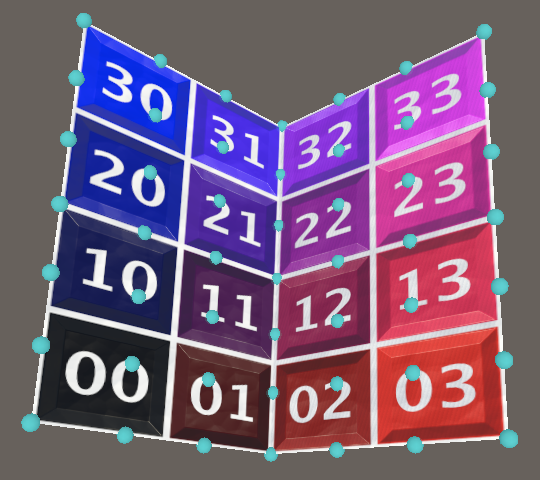

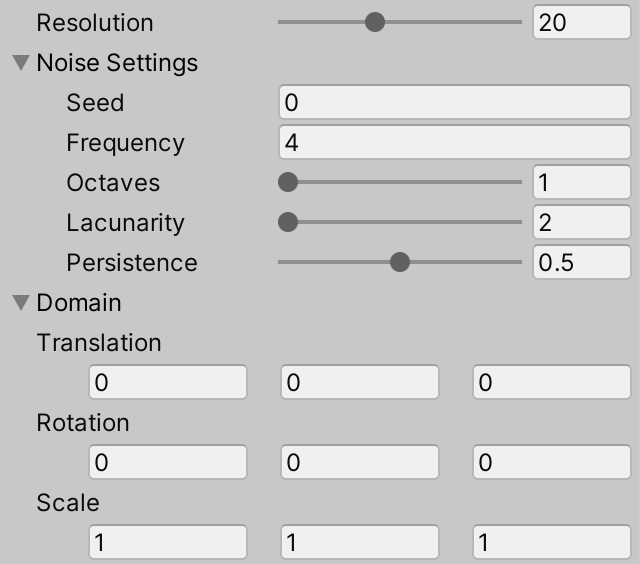

Let's make the settings and domain of the noise configurable, just like we did for the noise visualization. Add configuration fields for noise settings and a domain to ProceduralSurface, in this case with the scale set to 1.

[SerializeField, Range(1, 50)]

int resolution = 1;

[SerializeField]

Settings noiseSettings = Settings.Default;

[SerializeField]

SpaceTRS domain = new SpaceTRS {

scale = 1f

};

Pass both to the surface job when scheduling it.

SurfaceJob<Lattice2D<LatticeNormal, Perlin>>.ScheduleParallel(

meshData, resolution, noiseSettings, domain,

jobs[(int)meshType](

mesh, meshData, resolution, default, Vector3.up, true

)

).Complete();

And add the required parameters for both to SurfaceJob.ScheduleParallel. Also add a field for the domain TRS matrix and use it to transform the input positions in Execute.

float3x4 domainTRS;

public void Execute (int i) {

Vertex4 v = vertices[i];

float4x3 p = domainTRS.TransformVectors(transpose(float3x4(

v.v0.position, v.v1.position, v.v2.position, v.v3.position

)));

…

}

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, Settings settings, SpaceTRS domain,

JobHandle dependency

) => new SurfaceJob<N>() {

vertices =

meshData.GetVertexData<SingleStream.Stream0>().Reinterpret<Vertex4>(12 * 4),

settings = settings,

domainTRS = domain.Matrix

}.ScheduleParallel(meshData.vertexCount / 4, resolution, dependency);

Surface Displacement

In the Pseudorandom Noise series we also made the amount of displacement configurable. Let's do this here as well, adding another field and parameter to control the maximum displacement, using it to scale the final noise.

float displacement;

public void Execute (int i) {

…

float4 noise = sum / amplitudeSum;

noise *= displacement;

…

}

public static JobHandle ScheduleParallel (

Mesh.MeshData meshData, int resolution, Settings settings, SpaceTRS domain,

float displacement,

JobHandle dependency

) => new SurfaceJob<N>() {

vertices =

meshData.GetVertexData<SingleStream.Stream0>().Reinterpret<Vertex4>(12 * 4),

settings = settings,

domainTRS = domain.Matrix,

displacement = displacement

}.ScheduleParallel(meshData.vertexCount / 4, resolution, dependency);

Add a configuration option for it to ProceduralSurface, with a −1–1 range and a default of ½. Adjust the extra extents to match the maximum displacement.

[SerializeField, Range(1, 50)]

int resolution = 1;

[SerializeField, Range(-1f, 1f)]

float displacement = 0.5f;

…

void GenerateMesh () {

…

SurfaceJob<Lattice2D<LatticeNormal, Perlin>>.ScheduleParallel(

meshData, resolution, noiseSettings, domain, displacement,

jobs[(int)meshType](

mesh, meshData, resolution, default,

new Vector3(0f, Mathf.Abs(displacement)), true

)

).Complete();

…

}

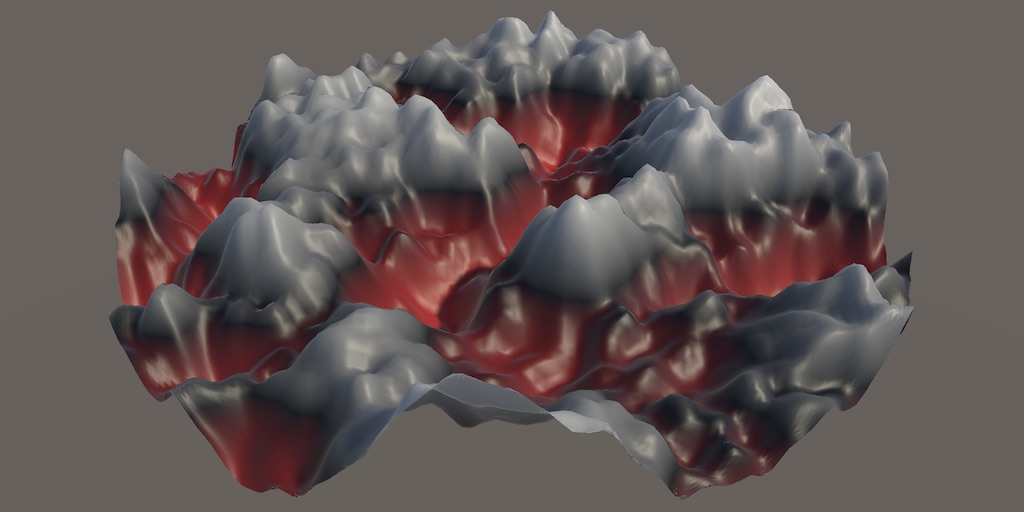

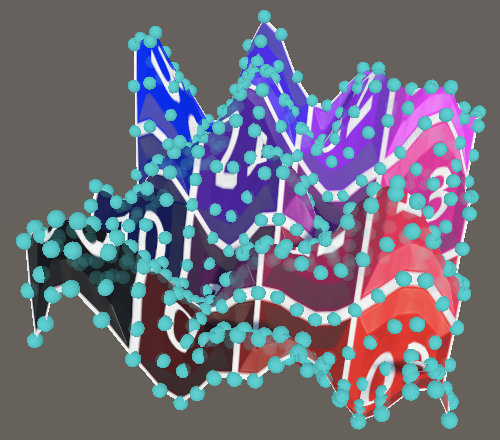

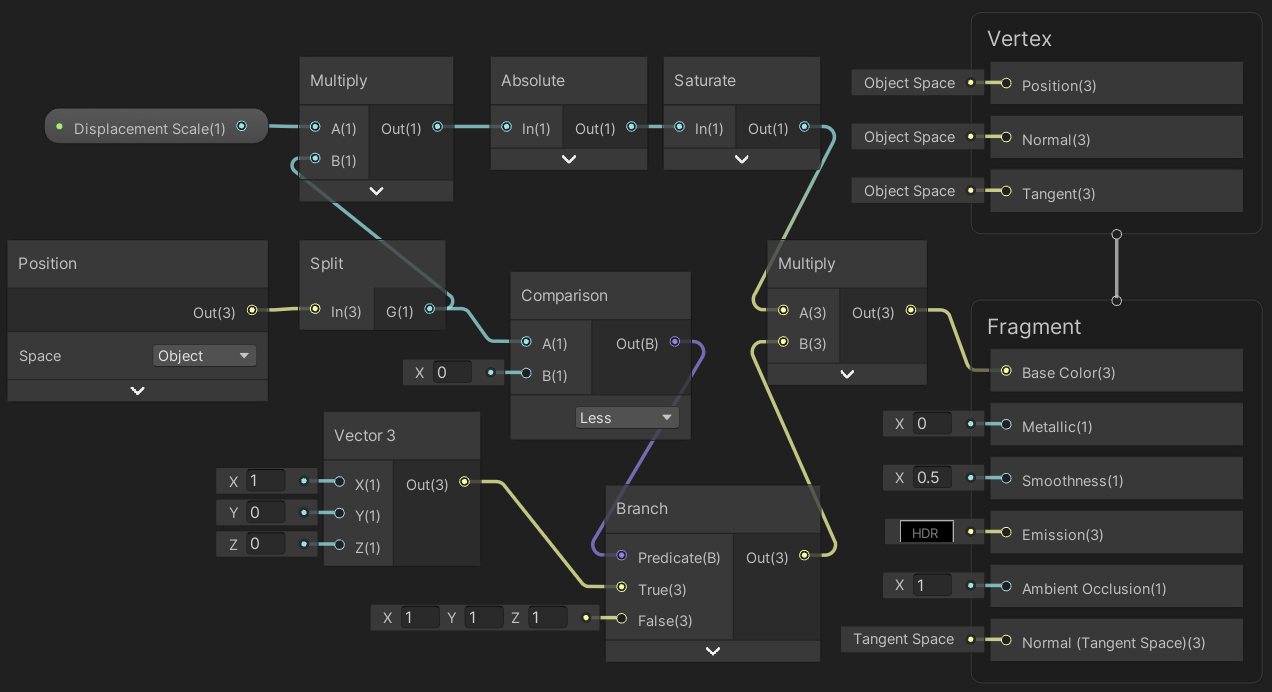

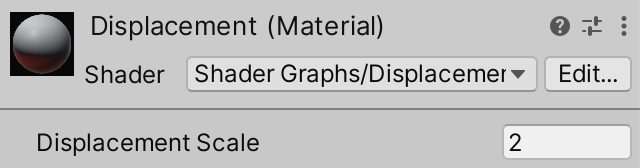

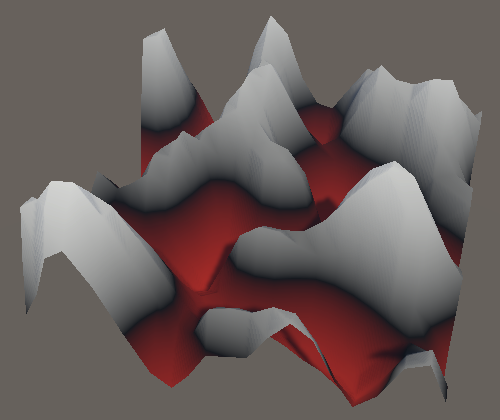

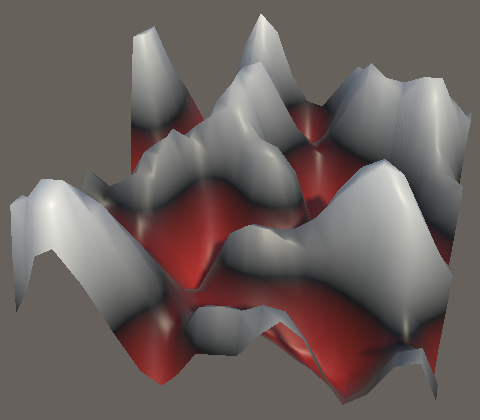

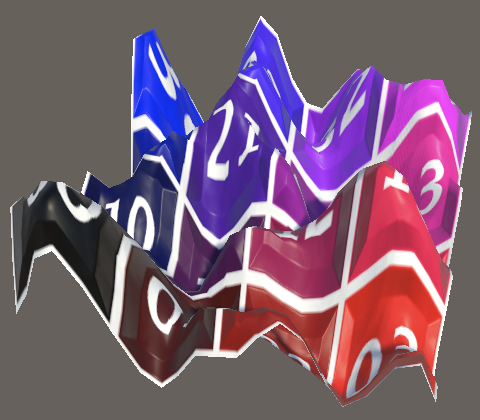

And let's also introduce a material option to colorize the mesh based on the displacement, similar to how we colored the noise visualization: black at zero elevation, increasing to white on the positive side and to red on the negative side, based on the object-space Y coordinate. Create a Displacement shader graph for this, with an extra displacement scale configuration and saturation of the final value so we can tune the strength of the coloration.

Add an option for it at the start of ProceduralSurface.MaterialMode, create a material, and add it to the materials array. I set the displacement scale to 2 so it reaches maximum strength at a displacement of ½.

public enum MaterialMode { Displacement, Flat, LatLonMap, CubeMap }
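The shader graph itself isn't shown in code form, but the coloration it describes could be sketched as a plain function. This is only one possible interpretation: DisplacementColorDemo and Colorize are hypothetical names, and the exact node setup of the graph may differ.

```csharp
using System;

public static class DisplacementColorDemo {
    // Maps an object-space Y coordinate to an RGB color: black at zero,
    // toward white for positive and toward red for negative elevation.
    public static (float r, float g, float b) Colorize (
        float y, float displacementScale
    ) {
        // Scale the elevation and saturate it to the 0-1 range.
        float strength = Math.Max(
            0f, Math.Min(1f, Math.Abs(y) * displacementScale)
        );
        return y >= 0f ?
            (strength, strength, strength) : (strength, 0f, 0f);
    }
}
```

With a displacement scale of 2, a Y coordinate of ½ gives full white and −¼ gives half-strength red, while zero elevation stays black.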

Normals and Tangents

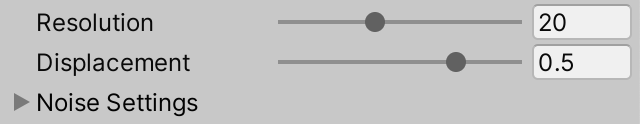

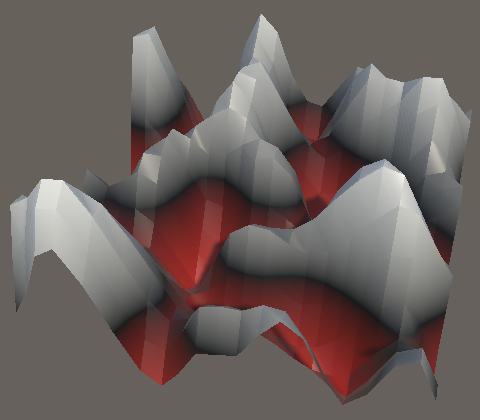

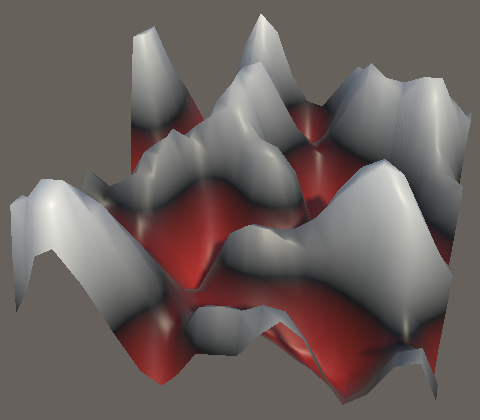

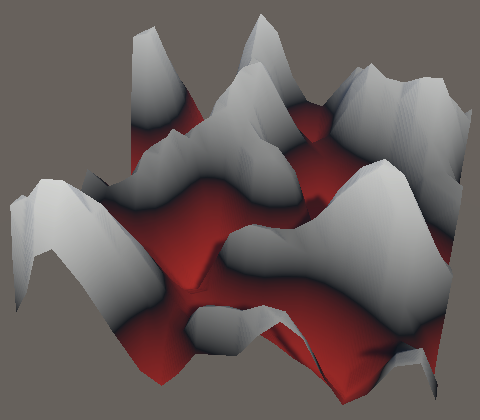

Although we now generate a surface with varying elevation it is still shaded as if it were flat, because we only modify vertex positions. The normal and tangent vectors still match the base shape, which is flat for the shared square grid. The simplest way to fix this is by using Unity's basic Mesh API to recalculate these vectors after modifying the mesh. We'll add support for this approach now and will add a different approach in the next tutorial.

Recalculating Normal Vectors

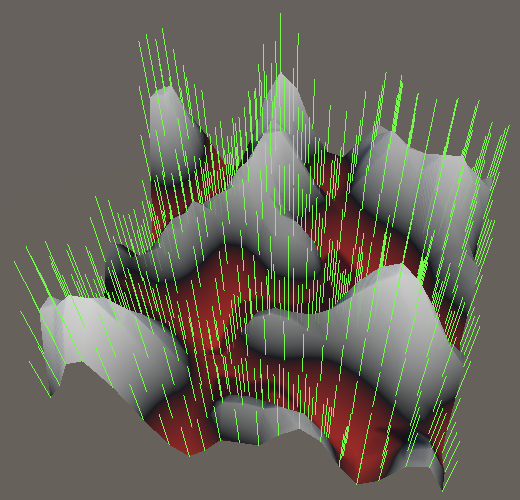

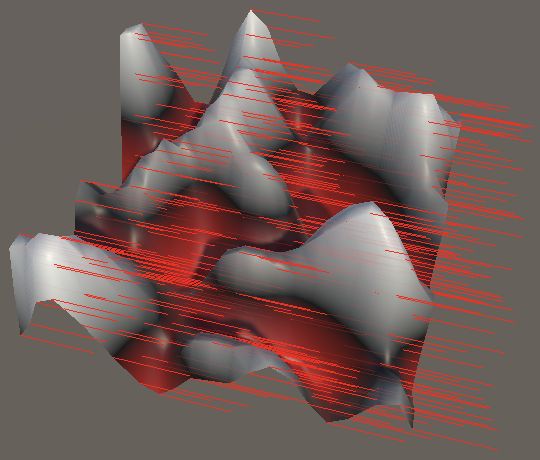

If we visualize the normal vectors it becomes very obvious that they match the base mesh shape. In the case of the shared square grid they all point straight up.

We can ask Unity to recalculate the normal vectors by invoking RecalculateNormals on the mesh. We have to do this in GenerateMesh after applying the mesh data.

Mesh.ApplyAndDisposeWritableMeshData(meshDataArray, mesh);

mesh.RecalculateNormals();

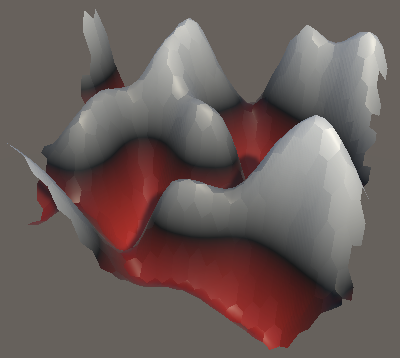

Unity determines the normal vectors by taking normalized cross products of the two triangle edge vectors adjacent to each vertex. When vertices are shared these normal vectors are averaged, which produces the appearance of a smooth surface. If vertices aren't shared then there will be visible seams along triangle edges. For example, the square grid surface only shares vertices per quad and not with adjacent quads, so it will have a faceted appearance.

Likewise for the hexagon grids, which only share vertices per hexagon.
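The idea behind the recalculation can be sketched with System.Numerics instead of Unity types. This is not Unity's actual implementation: NormalDemo and its methods are hypothetical, and the unweighted average is an assumption made to keep the example small.

```csharp
using System.Numerics;

public static class NormalDemo {
    // Normal of a single triangle: the normalized cross product
    // of two of its edge vectors, both starting at vertex a.
    public static Vector3 FaceNormal (Vector3 a, Vector3 b, Vector3 c) =>
        Vector3.Normalize(Vector3.Cross(b - a, c - a));

    // Normal of a vertex shared by multiple triangles: the normalized
    // sum of their face normals (assumed unweighted here).
    public static Vector3 SharedVertexNormal (params Vector3[] faceNormals) {
        Vector3 sum = Vector3.Zero;
        foreach (Vector3 n in faceNormals) {
            sum += n;
        }
        return Vector3.Normalize(sum);
    }
}
```

A flat triangle with corners (0,0,0), (0,0,1), and (1,0,0) has a face normal of (0,1,0). A vertex shared by that triangle and one facing (1,0,0) gets a normal halfway in between, which is what smooths the shading. When vertices aren't shared, each keeps the normal of its own triangle or quad, hence the facets.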

Recalculating Tangent Vectors

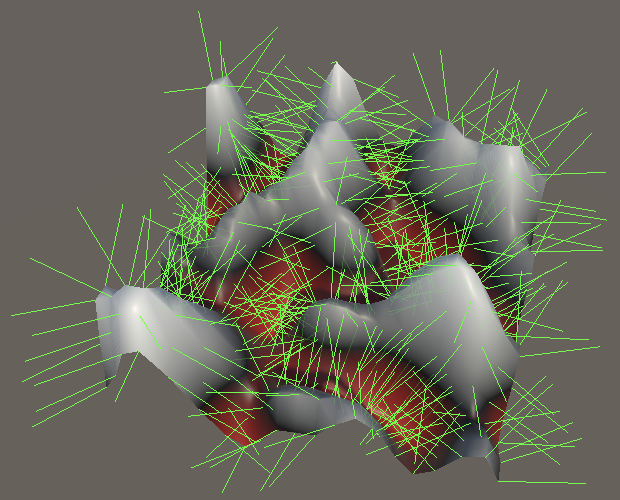

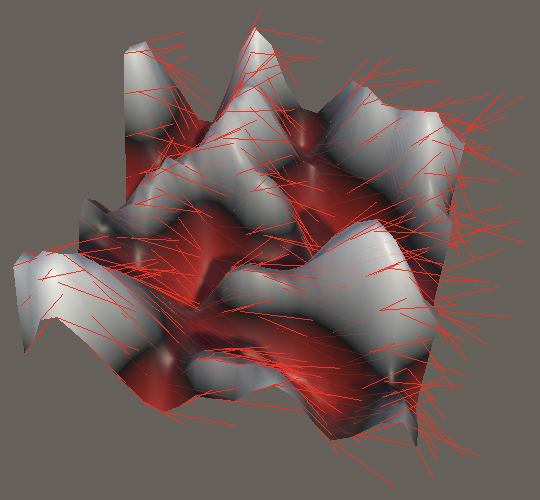

The tangent vectors have the same problem as the normal vectors.

These can be generated by invoking RecalculateTangents. This has to be done after recalculating the normals, because it uses the relationship between normal vectors and texture coordinates transformed to object space vectors to find the tangent vectors.

mesh.RecalculateNormals();

mesh.RecalculateTangents();

Optional Recalculation

We wrap up this tutorial by making the recalculation of normal and tangent vectors optional, which makes it easier to compare the results with and without it. Add toggle options for both.

[SerializeField]

MeshType meshType;

[SerializeField]

bool recalculateNormals, recalculateTangents;

…

void GenerateMesh () {

…

if (recalculateNormals) {

mesh.RecalculateNormals();

}

if (recalculateTangents) {

mesh.RecalculateTangents();

}

…

}

Whether recalculation of normals is on or off is immediately obvious.

It is less obvious for tangent vectors, and requires the use of a tangent-space normal map to be visible. Although the normal map might appear functional with incorrect tangent vectors, the result does not match the orientation of the surface.

The next tutorial is Simplex Derivatives.